An MGM+FST single node SE is experience a recurring issue where the FST service fails, though the mgm service continues.

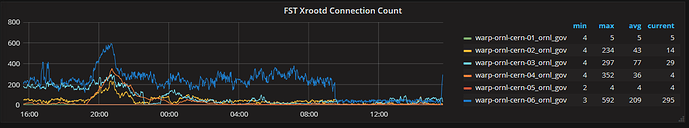

This SE is both receiving 3 party xfers from another SE at our facility, as well as serving data moved to it thus far.

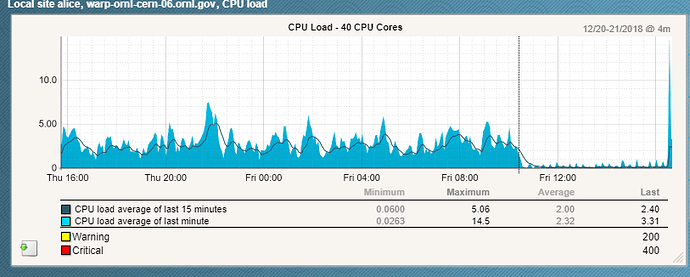

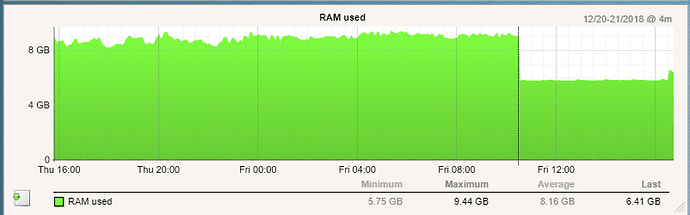

The system is not stressed during the xfers or serving, and a restart of eos brings everything back up fine. Monitoring data shows no issues with high load, memory exhaustion, or other anomalies when the FST service dies.

Are there any suggestions for how to increase debug level, where else to look, or what specifically to strace on to isolate the cause?

Cheers,

Pete

19:16:21 # service eos status

xrootd for role: mq (pid 92480) is running (as master) …

xrootd for role: sync (pid 92528) is running …

xrootd for role: mgm (pid 92576 92737) is running (as master) …

xrootd for role: fst dead but subsys locked

The FST log ends with no errs logged, just stat calls

19:18:03 # tail -n 2 /var/log/eos/fst/xrdlog.fst

181205 18:21:05 time=1544030465.255936 func=stat level=INFO logid=0013197a-f8b2-11e8-87fc-3cfdfe9f6254 unit=fst@warp-ornl-cern-06.ornl.gov:1095 tid=00007eff867ff700 source=XrdFstOfsFile:2546 tident=jalien.36699:842@aliendb06f.cern.ch sec= uid=10367 gid=1395 name=nobody geo=“” path=/07/09742/5476dfbc-d932-11e5-9340-1fa81892ec0e inode=13789970 size=2097152 mtime=1544030439.73649290

181205 18:21:05 time=1544030465.259123 func=stat level=INFO logid=fb860430-f8b1-11e8-9a2a-3cfdfe9f6254 unit=fst@warp-ornl-cern-06.ornl.gov:1095 tid=00007f00055fd700 source=XrdFstOfsFile:2546 tident=jalien.36631:218@aliendb06f.cern.ch sec= uid=10367 gid=1395 name=nobody geo=“” path=/01/16402/546f3b2a-a55b-11e8-af51-07462ce830b0 inode=13789917 size=12582912 mtime=1544030463.50811226

19:27:15 # tail -n 2 /var/log/eos/mgm/error.log

181205 18:21:03 time=1544030463.870356 func=CallManager level=ERROR logid=static… unit=fst@warp-ornl-cern-06.ornl.gov:1095 tid=00007eff569f6700 source=XrdFstOfs:886 tident= sec=(null) uid=99 gid=99 name=- geo=“” msg=“MGM query failed” opaque=“/?&mgm.access=create&mgm.ruid=10367&mgm.rgid=1395&mgm.uid=10367&mgm.gid=1395&mgm.path=/eos/aliceornlxfer/grid/14/22101/5477bb72-b4d0-11e8-ad9f-b390bad9fd43&mgm.manager:1094=warp-ornl-cern-06.ornl.gov&mgm.fid=00d26b91&mgm.cid=11949&mgm.sec=unix|alienmaster|alientest02.cern.ch||alienmaster|||transfer-3rd&mgm.lid=1048578&mgm.bookingsize=580505&mgm.targetsize=580505&mgm.fsid=6040&cap.valid=1544034060&mgm.pcmd=commit&mgm.size=1048576&mgm.checksum=2dda6ab5&mgm.mtime=1544030463&mgm.mtime_ns=869154000&mgm.modified=1&mgm.add.fsid=6040&mgm.commit.size=1&mgm.commit.checksum=1&mgm.logid=22d8d076-f8b2-11e8-aff9-3cfdfe9f6254”

181205 18:21:04 time=1544030464.490708 func=DoTpcTransfer level=ERROR logid=865f0d32-f8b1-11e8-b375-3cfdfe9f6254 unit=fst@warp-ornl-cern-06.ornl.gov:1095 tid=00007eff2bfff700 source=XrdFstOfsFile:2398 tident=alienmas.20568:337@aliendb06e.cern.ch sec= uid=10367 gid=1395 name=nobody geo=“” msg=“tpc transfer terminated - remote read failed"19:27:15 # tail -n 2 /var/log/eos/mgm/error.log

181205 18:21:03 time=1544030463.870356 func=CallManager level=ERROR logid=static… unit=fst@warp-ornl-cern-06.ornl.gov:1095 tid=00007eff569f6700 source=XrdFstOfs:886 tident= sec=(null) uid=99 gid=99 name=- geo=”" msg=“MGM query failed” opaque=“/?&mgm.access=create&mgm.ruid=10367&mgm.rgid=1395&mgm.uid=10367&mgm.gid=1395&mgm.path=/eos/aliceornlxfer/grid/14/22101/5477bb72-b4d0-11e8-ad9f-b390bad9fd43&mgm.manager:1094=warp-ornl-cern-06.ornl.gov&mgm.fid=00d26b91&mgm.cid=11949&mgm.sec=unix|alienmaster|alientest02.cern.ch||alienmaster|||transfer-3rd&mgm.lid=1048578&mgm.bookingsize=580505&mgm.targetsize=580505&mgm.fsid=6040&cap.valid=1544034060&mgm.pcmd=commit&mgm.size=1048576&mgm.checksum=2dda6ab5&mgm.mtime=1544030463&mgm.mtime_ns=869154000&mgm.modified=1&mgm.add.fsid=6040&mgm.commit.size=1&mgm.commit.checksum=1&mgm.logid=22d8d076-f8b2-11e8-aff9-3cfdfe9f6254”

181205 18:21:04 time=1544030464.490708 func=DoTpcTransfer level=ERROR logid=865f0d32-f8b1-11e8-b375-3cfdfe9f6254 unit=fst@warp-ornl-cern-06.ornl.gov:1095 tid=00007eff2bfff700 source=XrdFstOfsFile:2398 tident=alienmas.20568:337@aliendb06e.cern.ch sec= uid=10367 gid=1395 name=nobody geo=“” msg=“tpc transfer terminated - remote read failed”

Node config:

19:21:45 # eos node status warp-ornl-cern-06.ornl.gov

# ------------------------------------------------------------------------------------

# Node Variables

# ....................................................................................

debug.level := info

debug.state := info

domain := MGM

gw.ntx := 10

gw.rate := 120

http.port := 8001

manager := warp-ornl-cern-06.ornl.gov:1094

stat.active := online

stat.balance.ntx := 2

stat.balance.rate := 25

stat.geotag := CADES_ah75

stat.hostport := warp-ornl-cern-06.ornl.gov:1095

stat.net.ethratemib := 1192

stat.net.inratemib := 75.3088

stat.net.outratemib := 0.38199

stat.publishtimestamp := 1544030453453

stat.sys.eos.start := Sun Dec 2 15:44:13 2018

stat.sys.eos.version := 4.3.12-1

stat.sys.kernel := 2.6.32-696.30.1.el6.x86_64

stat.sys.keytab := dc952481

stat.sys.rss := 5472841728

stat.sys.sockets := 287

stat.sys.threads := 540

stat.sys.uptime := 18:20:53 up 40 days, 36 min, 0 users, load average: 5.69, 8.29, 9.04

stat.sys.vsize := 12086636544

stat.sys.xrootd.version := v4.8.3

status := on

symkey := oEQCcSPax7TERhIpi6kXAYehx40=

txgw := off